Subtitled 'The Hidden Rules of English Behaviour', this book purports to tell us why we are the way we are, if you're English, that is. Sorry, I shouldn't have assumed that. We do apologise a lot, something to do with our social dis-ease and hypocrisy; see, it's true, I wasn't really sorry.

The main fun of the book is comparing your own behaviour (and that of your friends, significant others and work colleagues) with the behavioural rules laid out in it. Kate has cunningly played to the English preoccupation with class by giving codes of behaviour for the various classes under each section. The other half lets herself down with a liking for napkin rings and coasters.

She also attempts to put us into context by comparing us with other nations, for instance picking out our dislike of talking about money (unless we're from Yorkshire, but the north is another country) and our ubiquitous use of humour.

The book is engagingly and amusingly written, but the style can pall and one can get fed up with being called a hypocrite and a social autistic, so it is probably best kept as a 'toilet book'. Oops, 'lavatory book': my lower-middle-class slip is showing.

'A Bucket of Sparks', 'Tartan Paint' or 'A Long Weight': all things the innocent gets sent for, to waste his time. It seemed apt.

Sunday 30 January 2011

Tuesday 25 January 2011

Recursion - tree walkers

There was these tree walkers fell off a cliff. Boom, boom -splat! I'll get my coat.

Much of the data in computers (including this web page) is stored in tree structures (or graphs; a tree is a particular kind of graph).

Figure: Tree structure of an HTML page

In the diagram above, the <html> element is the parent of the <head> element, and the <title> element is one of the children of that <head> element.

So how do you find elements in a tree? Say I want to do something to all the <p> (paragraph) tags, which sit at different levels: the only way I can manipulate them is to check every element, see whether it's a <p> tag, and if so do what I want to do.

Recursion is a classic method of walking a tree elegantly and with minimal knowledge of the size and shape of that tree. Recursive functions are ones that call themselves again and again to process data, but (and it's a big but, bigger than Mr Creosote's) they know when to stop; here, when an element has no more children to visit. A simple algorithm to process <p> elements might look like this:

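    // Assumes PHP's DOM extension; do_stuff() stands for whatever processing you want
    function find_p(DOMNode $element) {
        // If this element is a <p> tag, process it
        if ($element->nodeName == 'p') {
            do_stuff($element);
        }
        // Visit each child in turn; an element with no children skips the loop and returns
        $child = $element->firstChild;
        while ($child !== null) {
            find_p($child);
            $child = $child->nextSibling;
        }
    }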

You would kick this off by calling it with the <html> element. That isn't a <p>, so it won't do any processing; it will just get each of the <html> element's children in turn and call itself for each of them. For each of those children it will check whether it is a <p> and then get each of its children, and so on.

If an element has no children then the function just returns, effectively going back up a level.

Hopefully you can see that the function goes down the left side of the tree first (a depth-first walk), so <html> -> <head> -> <title>. <title> has no children, so the function returns to the calling while loop, which moves on to <head>'s next child, <link>, and so on.
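To make that visiting order concrete, here is a minimal sketch in JavaScript (the language the Biomorph program uses). The tree is a hand-built, hypothetical stand-in for the page in the diagram, with a <body> I've invented so that there are <p> elements at two different levels; `walk` and the `name`/`children` fields are my own names, not a DOM API.

```javascript
// A hypothetical, hand-built tree standing in for the page in the diagram;
// the <body> part is invented so that <p> elements sit at two different levels.
const page = {
  name: "html",
  children: [
    { name: "head", children: [{ name: "title", children: [] }, { name: "link", children: [] }] },
    {
      name: "body",
      children: [
        { name: "h1", children: [] },
        { name: "p", children: [] },
        { name: "div", children: [{ name: "p", children: [] }] },
      ],
    },
  ],
};

// Depth-first, left side first: deal with this element, then recurse into each child.
// A leaf has an empty children array, so the loop does nothing and the call returns.
function walk(element, visit, depth = 0) {
  visit(element, depth);
  for (const child of element.children) {
    walk(child, visit, depth + 1);
  }
}

const order = [];
const paragraphDepths = [];
walk(page, (element, depth) => {
  order.push(element.name);
  if (element.name === "p") paragraphDepths.push(depth);
});

console.log(order.join(" -> ")); // html -> head -> title -> link -> body -> h1 -> p -> div -> p
console.log(paragraphDepths); // the depths where a <p> was found: 2 and 3
```

Passing the work in as a `visit` callback keeps the walking separate from what you do at each element, so the same walker could find <p> tags, count elements or print the tree.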

At no point in the program have I had to know how many children an element has or how wide or deep the tree is.

You can see recursion in action in the Biomorph program, where the method draw_morph() calls itself recursively to create the branching morphs.

NB: in the real XML/HTML world you will probably find that the hard work is done for you. If I wanted to process all the <p> tags in a document I'd probably use XPath (the expression //p selects every <p> element, at any depth). If you do need to work through an XML document element by element (and I've had to), then recursion would be the way to go.

A word of warning: recursion can be resource heavy. Every call that hasn't yet returned holds on to its resources (its place on the call stack, plus any data passed to it), and they won't be freed until that call returns. If the tree is very deep and you are passing around a lot of data, the effect can be significant and your computer could freeze.
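One common way round this (a swapped-in technique, not something from the walk above) is to replace the call stack with an explicit stack of your own. The sketch below, in JavaScript, visits elements in the same left-first order; the `walkIterative` and `makeChain` names and the 100,000-deep test chain are hypothetical. A recursive walk of a chain that deep may well exceed the default call stack in many JavaScript engines, whereas this version only needs memory for the array.

```javascript
// Same left-first order as the recursive walk, but pending elements are kept in an
// ordinary array, so depth is limited by available memory rather than the call stack.
function walkIterative(root, visit) {
  const stack = [root];
  while (stack.length > 0) {
    const element = stack.pop();
    visit(element);
    // Push children in reverse so the leftmost child is popped, and so visited, first.
    for (let i = element.children.length - 1; i >= 0; i--) {
      stack.push(element.children[i]);
    }
  }
}

// A hypothetical, pathologically deep "tree": 100,000 elements, each nested in the last.
function makeChain(length) {
  const root = { name: "div", children: [] };
  let current = root;
  for (let i = 1; i < length; i++) {
    const child = { name: "div", children: [] };
    current.children.push(child);
    current = child;
  }
  return root;
}

let count = 0;
walkIterative(makeChain(100000), () => count++);
console.log(count); // 100000
```

The trade-off is readability: the recursive version says what it means in a few lines, so it's still my first choice unless the tree really can be that deep.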

Sunday 23 January 2011

Biomorphs - software genetics in HTML5 and JavaScript.

'Pull the switch, Igor, we're frying tonight!' Today we're going to create artificial life, MWHAHAHA! Well, not really, but we are going to have a play with genetics and software development: less fun than lightning and a methane atmosphere, but quicker and easier to arrange than a suitable planet.

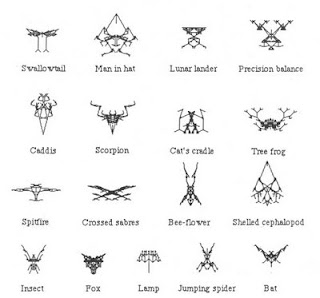

Richard Dawkins describes biomorphs in his book The Blind Watchmaker (chapter 3). Biomorphs are shapes that change by mutation, and you can build up creatures by repeatedly selecting from the generated shapes. Pretty much a game, really, although the book does carry a good description of n-dimensional spaces (9 in this instance); maybe I finally get it.

As an exercise in brushing up my JavaScript, and playing with HTML5, I am going to have a go at creating a version of his concept. I've chosen JavaScript and HTML5 because I can host a page with the program on this blog without having to set up a server somewhere.

In Dawkins' original, a biomorph is a tree structure with 9 genes controlling its growth; these genes control things like stem length, branch angle, the number of branching iterations &c. There isn't an algorithm as such in the book, so this will be an 'Agile' (or suck it and see) development: I'll solve small problems and hope that I can bolt them all together into an overall solution.
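As a first small problem, here's a minimal JavaScript sketch of the genetics, under my own assumptions rather than anything the book specifies: a genome is a plain array of 9 integers, the last of which (hypothetically) sets the branching depth, and a mutation nudges exactly one gene up or down by 1. That also makes the 9-dimensional space more tangible: each biomorph has at most 2 × 9 = 18 immediate neighbours.

```javascript
// Hypothetical genome: an array of 9 integers. As an assumption (a simplification
// of Dawkins' scheme), genes 0-7 shape the branches and gene 8 sets the branching depth.
const GENE_COUNT = 9;
const DEPTH_GENE = 8;

// Every child differs from its parent by +1 or -1 in exactly one gene, so each
// biomorph has at most 18 immediate neighbours in the 9-dimensional gene space.
function offspring(parent) {
  if (parent.length !== GENE_COUNT) throw new Error(`expected ${GENE_COUNT} genes`);
  const children = [];
  for (let g = 0; g < GENE_COUNT; g++) {
    for (const step of [-1, 1]) {
      const child = parent.slice();
      child[g] += step;
      // Don't let the depth gene drop below 1, or there'd be nothing to draw.
      if (g === DEPTH_GENE && child[g] < 1) continue;
      children.push(child);
    }
  }
  return children;
}

const ancestor = [1, -1, 2, 0, 3, -2, 1, 0, 4];
console.log(offspring(ancestor).length); // 18
```

Selecting one of these children and calling `offspring` on it again is the whole 'breeding' game: a walk, one step at a time, through the 9-dimensional space.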

Finally, as biomorphs are tree structures, the natural way to draw them will be recursive; it'll be interesting to see whether JavaScript and the browsers can handle that.
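A first stab at the recursive drawing might look like the sketch below, again hypothetical: `growMorph` is a name I've made up for this sketch. It adds one stem, then calls itself for a left and a right branch with a shorter stem and one less level of depth, stopping at depth 0. It returns line segments rather than drawing directly, so it can be checked without a browser; `drawSegments` shows how they might be put onto an HTML5 canvas with the standard 2D context methods.

```javascript
// Hypothetical recursive grower: each call adds one stem segment, then calls itself
// twice, for a left and a right branch, with a shorter stem and one less level of depth.
function growMorph(x, y, angle, length, depth, spread, segments = []) {
  if (depth === 0) return segments; // stopping condition: no more branching
  const x2 = x + length * Math.cos(angle);
  const y2 = y + length * Math.sin(angle);
  segments.push([x, y, x2, y2]);
  growMorph(x2, y2, angle - spread, length * 0.8, depth - 1, spread, segments);
  growMorph(x2, y2, angle + spread, length * 0.8, depth - 1, spread, segments);
  return segments;
}

// In the browser, the segments could then be drawn on a canvas's 2D context.
function drawSegments(ctx, segments) {
  ctx.beginPath();
  for (const [x1, y1, x2, y2] of segments) {
    ctx.moveTo(x1, y1);
    ctx.lineTo(x2, y2);
  }
  ctx.stroke();
}

// Start at the origin pointing "up" (canvas y grows downwards), six levels deep.
const morph = growMorph(0, 0, -Math.PI / 2, 40, 6, Math.PI / 6);
console.log(morph.length); // 63, i.e. 2^6 - 1
```

Each extra level doubles the work (depth d gives 2^d - 1 segments), so the depth gene is the one to keep an eye on if the browser starts to struggle.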

The program will appear on a separate page available from the top menu, our first design decision!

Thursday 20 January 2011

CodeIgniter PHP Framework.

As part of a job hunt, I am having to take a look at CodeIgniter, a PHP development framework. The idea of a framework is that it saves you time by already implementing a lot of the basic code that you would otherwise write for every application, yet it doesn't constrain you as much as a CMS like Drupal would.

I have looked at several frameworks in the past and developed projects using a couple of them, Zend and Rails. Zend is quite good in that you can treat it as either a library or a full-blown MVC framework. Rails is also good for prototyping sites and web applications, but if you want to get a fairly vanilla website up and running quickly then a CMS like Drupal would probably be the first choice (perhaps followed by a bespoke/framework version of the site for version 2).

Anyway, CodeIgniter seems to be popular and bills itself as 'lightweight', not something you could accuse Zend of. It's an MVC system that says you don't need a templating language or, necessarily, a database!

Installation

I'm trying this on Fedora Linux running under VMware on my laptop. Interestingly, the minimum PHP requirement is 4.3.2, although it does run under PHP 5, which has been out for several years now.

Initial installation was pretty simple: unzip the file in your web root and edit the config as per the instructions (NB the config file path should be system/application/config/config.php, relative to the installation folder). That's it, you get a welcome page. Obviously there is no database integration at this stage.

What do you get?

Initially you get a View and a Controller, but no Model; fair enough, we haven't got an app yet. Take a look at the feature list: there's nothing particularly outstanding there. It looks like an MVC system with some bog-standard libraries, most of which seem to be available in native PHP or PEAR/PECL; perhaps that's why there seem to be quite a lot of articles on using bits of Zend Framework within CodeIgniter.

Out of the box the URLs all contain index.php, e.g. http://localhost/CodeIgniter/index.php/blog/. I don't like this, but it is easy enough to remove using an Apache rewrite rule.

Controllers

These seem to work much as one would expect, and support sub-folders, which should make for well-organised code. For instance, you should be able to have http://site/blog/post and http://site/blog/list in different files in a directory called blog, rather than lumping them into one big blog controller. As far as I can see, parameters are passed raw, as URL segments, rather than as name-value pairs (nvps). There are pros and cons to this: the pro is shorter URLs, the con is that it's less obvious from the URL what the function arguments are. Given the dynamic (OK, chaotic) nature of most websites, I can see bugs arising. Oh yeah, they live in the controllers folder.
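To make the segments-versus-nvps trade-off concrete, here's a hypothetical JavaScript sketch (not how CodeIgniter itself is implemented) of splitting a segment-style URL into controller, method and arguments, next to the name-value-pair version using the standard `URL` and `URLSearchParams` classes. The 'welcome'/'index' defaults for an empty path are assumptions, and sub-folders are ignored.

```javascript
// Hypothetical sketch, not CodeIgniter's code: map a segment-style path
// (/controller/method/arg1/arg2...) onto its parts.
function routeSegments(path) {
  const segments = path.split("/").filter(s => s !== "" && s !== "index.php");
  const [controller = "welcome", method = "index", ...args] = segments;
  return { controller, method, args };
}

// The name-value-pair alternative: every argument is labelled in the URL itself.
function routeQuery(url) {
  return Object.fromEntries(new URL(url).searchParams);
}

// Segments: short, but "42" and "comments" are identified only by their position.
console.log(routeSegments("/index.php/blog/post/42/comments"));
// Name-value pairs: longer, but self-describing.
console.log(routeQuery("http://site/blog?action=post&id=42&view=comments"));
```

With segments, swapping two arguments in a link silently hands the method the wrong values, which is the kind of bug I have in mind; with nvps the name travels with the value.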

Views

They live in the views directory! Whilst there is a templating language, it seems to be regarded as unnecessary and, going by the manual, PHP is used directly. Fine by me: PHP is a templating language. It seems that views have to be called explicitly. Boring: I like the default that says if I have a 'post' controller I'll call a 'post' view, if it exists; but I realise that this is just syntactic sugar. Beyond that it's just standard PHP front-end programming. Data can be passed from the controller to the view as an array (of however many dimensions), just like Smarty, so that's all good. You can also call multiple views from one controller function, thus mimicking Zend layouts, but this starts to push display logic into the controller, breaking the MVC pattern.

Models

These seem to be a bit of an afterthought and are described as optional. As an ageing database hacker, I always start my development and design from the model, as it embodies the essence of an application and forces you to describe the things you are dealing with. So one black mark there. Models implement a cut-down Active Record pattern: tables aren't implemented as classes (as they are in Rails and Zend). I think this is a shame, as you lose some of the name-based goodness that you get with the one-table-one-class model (meta model?). On the other hand, the Rails/Zend version has quite a steep learning curve when it comes to more complex queries.

Models are abstracted from the underlying database engine; as it's PHP 4 compatible, I'm guessing not through PDO (which is PHP 5 only). You may still need to understand the underlying database, though; for example, transactions aren't going to work on a MySQL MyISAM table.

A couple of nice features are method chaining with PHP 5, to produce readable code:

    $this->db->select('title')->from('mytable')->where('id', $id)->limit(10, 20);

Cache control, which lets you cache part of a query string, is supported, as is result caching.

Transactions, meta data and adapter function calls are all supported.

And there's more!

There are the usual helper, plugin and library mechanisms. There are limited hooks that let you call your own code at points in the flow of control through CodeIgniter. There's an AutoLoad mechanism, although the description sounds more like a pre-load, so not on-demand like Zend's. It would seem that scaffolding has been dropped. You can override the routing and perform output caching.

What do I think?

That I'm knackered now. I think I might like CodeIgniter: it seems less heavyweight than the Zend Framework and easier to get to grips with, yet still retains most of the essentials. If you are desperate for a bit of Zend (maybe Lucene Search), my guess is that you could still use it from within CodeIgniter (although I think I'd run Solr and write a class to talk to that, if there isn't one already).

So CodeIgniter, my first framework, and maybe you won't ever grow out of it.

Sunday 9 January 2011

Pumpkin Soup

I normally try to grow a few pumpkins on the allotment for Halloween, and any spares can be made into soup for Guy Fawkes Night and frozen for the rest of the winter. This year I was able to use the stock from boiling up a gammon (sometimes it's too salty); I generally cook the gammon in apple juice as well as water, and the stock made a good soup great.

Recipe:

- 1.5 lbs (675g) pumpkin flesh

- 2 large onions

- 8oz carrots

- 1 can chopped tomatoes

- juice of a lemon

- 4oz red lentils

- 3.5 pints stock

- pepper, nutmeg and salt to taste

- 0.5 pint milk (optional)

Chop and fry the onions and carrots until the onions are soft. Add the rest of the ingredients (except the milk), bring to the boil, then turn down the heat, put the lid on the pan and simmer for half an hour or so until the veg and lentils are soft. I then blend it in the pan using one of those wand blenders. If you are planning to eat the soup straight away, you can add the milk now (if wanted; it makes the soup taste smoother) and reheat. Otherwise you can leave it until you are ready to eat; a dash in a microwaved mugful works just as well.

Saturday 8 January 2011

Cooking Stock.

I'm tight, so I really like making stock as a by-product of cooking other things. Generally I make it from the remains of a chicken (turkey at Christmas), and you can sometimes get away with ham too, although it can be too salty. Anything with bones will do, though, except maybe lamb (soon after I wrote this I came across a recipe needing lamb stock).

Stock recipe (makes around 1 litre/2 pints):

- Chicken carcass

- 1 Carrot, cut into 2 or 3 pieces.

- 1 onion, quartered. (Or leek tops if you've been cooking leeks)

- 3 cloves

- 4-6 peppercorns

- 3 cardamom pods (optional)

- herbs: parsley, thyme, bay, rosemary, anything that you've got (parsley stalks are good too)

That's it, either use it or freeze until ready, great for soups, casseroles, risottos &c.

Friday 7 January 2011

Experiments With A Simple Evolutionary Algorithm (2)

Why is this interesting?

It's the way the problem is solved. Normally with a program you work out what problem needs solving and write code to solve that specific problem. With this algorithm less understanding is needed: you just need a way of working out whether one child is 'fitter' than another, and everything else is left to random mutation plus selection.

Mama doesn't know best

In my original version of the algorithm I allowed cloning: if no child was better than the parent, the parent was passed back to start the next generation. When I took this out it seemed to make little or no difference to the number of generations needed to solve the problem.

Limitations

This is a very simple genetic algorithm: it relies solely on mutation rather than sexual breeding, and it only breeds from one child, the very best.
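For reference, here is a minimal JavaScript sketch of this kind of algorithm: mutation only, one parent, and only the single fittest child goes on to breed, with no cloning of the parent. The target phrase (Dawkins' 'METHINKS IT IS LIKE A WEASEL'), the alphabet, the mutation rate and the number of children are illustrative assumptions, not settings taken from my program.

```javascript
// Minimal mutation-only evolutionary search towards a target phrase.
// Assumptions: the 27-character alphabet, 5% mutation rate, 100 children per
// generation and the example target are illustrative choices.
const ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ ";

// Fitness: the number of characters that already match the target.
function fitness(candidate, target) {
  let score = 0;
  for (let i = 0; i < target.length; i++) {
    if (candidate[i] === target[i]) score++;
  }
  return score;
}

function randomChar(rng) {
  return ALPHABET[Math.floor(rng() * ALPHABET.length)];
}

// A child is a copy of its parent in which each character has a small chance of being replaced.
function mutate(parent, rate, rng) {
  let child = "";
  for (const ch of parent) child += rng() < rate ? randomChar(rng) : ch;
  return child;
}

// Only the single fittest child breeds; the parent is not cloned into the next generation.
function evolve(target, { children = 100, rate = 0.05, maxGenerations = 10000, rng = Math.random } = {}) {
  let parent = Array.from(target, () => randomChar(rng)).join("");
  let generations = 0;
  while (parent !== target && generations < maxGenerations) {
    let best = null;
    let bestScore = -1;
    for (let i = 0; i < children; i++) {
      const child = mutate(parent, rate, rng);
      const score = fitness(child, target);
      if (score > bestScore) {
        best = child;
        bestScore = score;
      }
    }
    parent = best;
    generations++;
  }
  return { best: parent, generations };
}

const result = evolve("METHINKS IT IS LIKE A WEASEL");
console.log(`${result.best} after ${result.generations} generations`);
```

Passing `rng` in means a seeded random number generator could be swapped in for repeatable runs, which helps when comparing variations like the cloning change above.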

I haven't tried it yet, but I suspect that it would be easy for the program to vanish up a blind alley.

Say that, instead of matching a phrase, the goal was to solve a maze or to find a route between two points on a road map; the fitness test would probably be distance from the goal. In either example the test could easily drive the program into a dead end, and it has no way of backing out of it.
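One way to see the blind-alley problem is a deliberately simplified stand-in: a walker that, like always breeding from the fittest child, takes the single step that most reduces its distance from the goal and never backtracks. The maze and the `greedyWalk` helper below are made up for illustration in JavaScript; this is plain greedy hill-climbing rather than the evolutionary algorithm itself.

```javascript
// Hypothetical maze: '#' is wall, S is the start and G the goal.
// The only way through is up, along the top corridor and back down.
const MAZE = [
  "########",
  "#......#",
  "#.####.#",
  "#S...#G#",
  "########",
];

function find(maze, ch) {
  for (let y = 0; y < maze.length; y++) {
    const x = maze[y].indexOf(ch);
    if (x !== -1) return [x, y];
  }
  return null;
}

// Fitness test: Manhattan distance from the goal, ignoring walls.
const distance = ([x1, y1], [x2, y2]) => Math.abs(x1 - x2) + Math.abs(y1 - y2);

// Always take the open neighbouring square that is strictly closer to the goal,
// and never backtrack; stop when no neighbour improves on the current square.
function greedyWalk(maze) {
  const goal = find(maze, "G");
  let pos = find(maze, "S");
  const path = [pos];
  while (distance(pos, goal) > 0) {
    const [x, y] = pos;
    const open = [[x + 1, y], [x - 1, y], [x, y + 1], [x, y - 1]]
      .filter(([nx, ny]) => maze[ny][nx] !== "#");
    let next = null;
    for (const square of open) {
      if (distance(square, goal) < distance(next ?? pos, goal)) next = square;
    }
    if (next === null) break; // blind alley: nothing nearby is "fitter"
    pos = next;
    path.push(pos);
  }
  return { reached: distance(pos, goal) === 0, position: pos, path };
}

const walk = greedyWalk(MAZE);
console.log(walk.reached, walk.position); // reached is false; stuck at x = 4, y = 3
```

The walker heads straight for the goal, reaches the wall just two squares away, and stops: going up and round would mean accepting a worse score for a while, which a fittest-only rule never does.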

How do we get out of it? What happens in life? In short, I haven't a clue. A few possible approaches would be:

- Relax the breeding algorithm to let more than the very best breed, perhaps by having multiple children, their number determined by fitness.

- Sex (imagine Clarkson saying it): this would shake things up a bit.

- Backtracking: valid in AI (and politics), but maybe not in evolution.

- Competition: more starting places, so more routes to the goal.
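The first of those, letting more than the very best breed with the number of children set by fitness, could look something like this JavaScript sketch. `allocateChildren` is a hypothetical helper of my own; it shares a fixed litter among parents in proportion to their fitness, using largest-remainder rounding so the counts always add up.

```javascript
// Share out a fixed number of children among several parents in proportion to
// their (non-negative) fitness, with largest-remainder rounding so the counts add up.
// A hypothetical helper, one possible reading of the first suggestion.
function allocateChildren(fitnesses, totalChildren) {
  const sum = fitnesses.reduce((a, b) => a + b, 0);
  if (sum <= 0) throw new Error("at least one parent needs a positive fitness");
  const exact = fitnesses.map(f => (f * totalChildren) / sum);
  const counts = exact.map(e => Math.floor(e));
  const leftover = totalChildren - counts.reduce((a, b) => a + b, 0);
  // Give any leftover children to the parents with the largest fractional shares.
  const byRemainder = exact
    .map((e, i) => ({ i, remainder: e - Math.floor(e) }))
    .sort((a, b) => b.remainder - a.remainder);
  for (let k = 0; k < leftover; k++) counts[byRemainder[k].i]++;
  return counts;
}

console.log(allocateChildren([5, 3, 2], 10)); // [5, 3, 2]
console.log(allocateChildren([28, 20, 7], 12)); // [6, 4, 2]
```

Weaker parents still get a few children, which keeps some alternative routes alive instead of betting everything on the current leader.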

Tuesday 4 January 2011

The Fabric of Reality

by David Deutsch

This is a frustrating book: I feel that there is some good stuff in there, but the arguments aren’t that well made.

Deutsch’s theme seems to be that we can construct a theory of everything from four current scientific theories: the Turing principle of a universal computer, the multiverse version of quantum physics, Karl Popper’s view of knowledge, and Dawkins’s update of Darwinian evolution; and the greatest of these is quantum theory. The argument is that all these theories can be related, and that, as quantum theory is the most basic (in terms of physics and mathematical description), it is the most important. The other three theories are emergent: they can’t be connected directly, mathematically, to the base theory in the way that, say, large parts of chemistry can be, yet they still provide good working explanations of the universe (multiverse!) as it is.

The word ‘explanation’ is key to the book: he explains (following Popper) that all scientific theories are explanations of problems, which we use until we find a better one. I struggled with the word ‘problem’, but it helps if I read it more as ‘conundrum’, or define it as something that needs an explanation. An explanation is deemed good if it is the simplest one to fit all the known facts (see William of Occam). So whilst we could describe planetary motion in terms of angels moving the heavenly bodies about the earth so as to make it appear that the earth goes around the sun, it’s much simpler just to treat the earth as moving around the sun (which we would have to do, angels or no) than to worry about the motives of the angels as well.

It is also central to the book that we should take explanations seriously and treat them as true until we find a problem with them that needs a new explanation. So Newtonian mechanics was believed in and treated as true, and the world advanced using Newton for a couple of centuries until problems arose (such as the orbit of Mercury differing slightly from the prediction) that required a new explanation: General Relativity. Deutsch argues that this is not happening with his chosen theories: although they are the best explanations we currently have, they are not taken seriously, at least in part because we just don’t like them. Quantum theory is downright weird. You either have to believe in the Copenhagen interpretation, where the world changes because you look at it, or the multiverse theory, where new universes are created at every decision point and that damn cat is both dead and alive. The Selfish Gene is a cold and amoral theory that doesn’t need a god. That’s not a worry when you’re talking about rocks and atoms, but this is personal, and we have emotional problems dealing with it. The Turing principle is fine, but largely ignored: we’re too busy playing with computers to think much about them. Perhaps the same is true of Popper, although his view seems pretty similar to the scientific method as I was taught it in school.

So what do I think? It’s not very coherent, perhaps because three of the theories are emergent, so we can’t formally tie everything together. There are links between the theories, obvious ones between Turing and Dawkins, and computers are, in a limited sense, quantum devices anyway. So it’s worth reading, with lots to think about, but for me it lacked a defined central argument, and some of the logic chopping seemed to get close to ‘All elephants are grey, the battleship is grey, therefore the battleship is an elephant.’ I do realise, though, that this is because we are supposed to assume the four theories to be absolutely true and dream up ideas around that assumption, ideas that we can then test.
