| Touch Me! |

The major player is the Kindle. It's small, light and decent looking, but at the time of writing there is no touch-screen model in the UK. Other drawbacks are the closed format and the fact that Amazon get enough of my money anyway; a market is only a market if there is competition, otherwise it is a monopoly. There's the Sony, but at £129 that's expensive compared to the Kobo Touch at under £100.

The Kobo Touch is less than a ton, a mere tenner more than the non-touch Kindle. It looks pretty decent, especially in the matt black, and can be picked up in the high street from Smiths or Argos. The spec is much the same as the Sony and the Kindle, with a 6 inch Pearl E-ink display, battery life of around a month and 1 GB of free storage, although on the Kobo you can insert a microSD card to increase this.

The Kobo, unlike the Kindle, reads EPUB books, so you are not restricted to the Kobo shop. I haven't had a good poke around the shop yet, but it looks well enough stocked. The Kobo also supports DRM-locked books via Adobe Digital Editions, which means that I can read books from the county library; I've downloaded Flashman on the March to try it out.

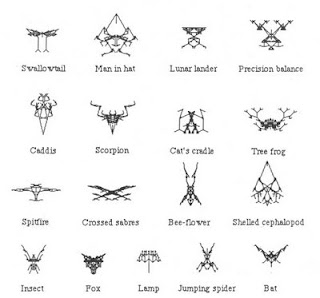

It will also cope with PDFs, so I have copied the G3 manual onto it, and it displays well enough. This is a bit of a boon, as the manual comes as a PDF, which otherwise means either printing it out or lugging a laptop around if you want to refer to it away from home.

In use, it hasn't had a real test yet, but so far so good. The display is clear and pleasant, and the touch screen works well, although it can be a little slow compared to the phone. The Kobo Touch is light and easy to hold in the hand; the quilting on the back is solid and feels quite pleasant. My eyes are still adjusting to it, probably because I'm holding it at a different distance and angle than I would a book, but the font, size and spacing are all selectable. It strikes me that these devices might be good for older readers (which I am rapidly becoming one of), as they are a lot lighter and handier than large print books.

The software works well and the device is pretty intuitive, even for someone who hasn't used a reader before, as it should be, since there isn't much to it. It is easy to bring up the table of contents for a book and navigate around it. I was half way through 'The Castle of Otranto' on my Android phone, and I'm now at the same place on the eReader. If you use the Kobo Reader software on your devices, then what you're reading should be kept in sync, but I haven't tried this. Words can be checked in a dictionary and highlighted, pages can be annotated, and you can even browse the web if you are desperate enough. I'd advise sticking to what the Kobo does best: just read books on it, it's very good at that.

In conclusion: right now, this Christmas, in the UK, the Kobo is a Kindle killer. When Amazon ship us the Touch and open up their shop in the same way that they have in the States, it won't be, except that the Touch may well be 30% more expensive, and that's a good few books.